The development of artificial intelligence involves contributions from numerous researchers and innovators over decades. It is challenging to attribute its invention to a single individual, but AI's history can be traced back to the mid-20th century.

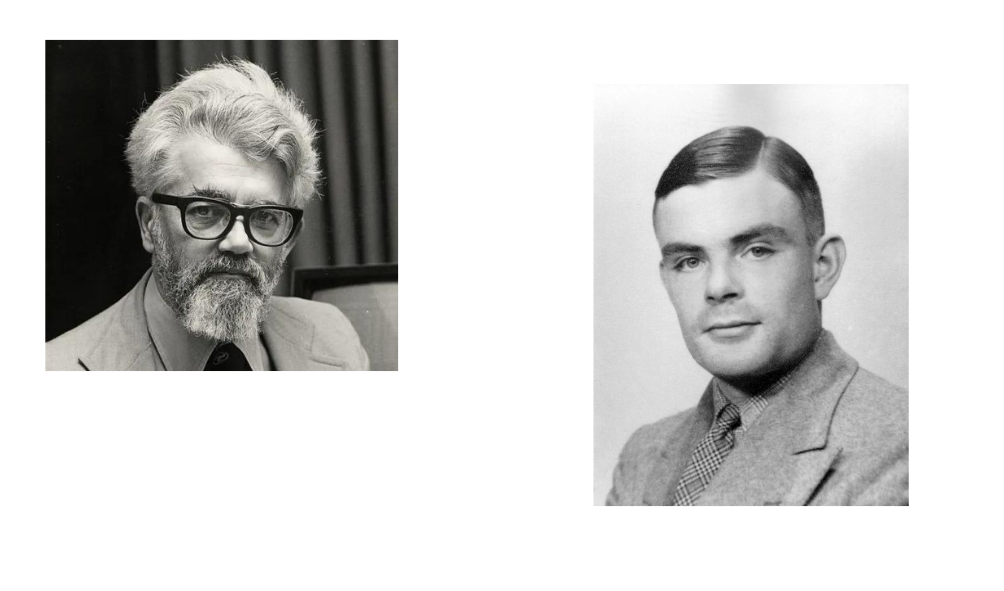

One of AI's pioneers was Alan Turing, a British mathematician, logician, and computer scientist who proposed the concept of a theoretical computing device that could simulate any algorithmic computation. In 1950 he published "Computing Machinery and Intelligence", the paper that introduced what is now known as the Turing Test. Another key figure in AI is John McCarthy, a computer scientist who coined the term "artificial intelligence" and organized the 1956 Dartmouth Conference, which is widely considered the birthplace of AI as an academic field.

Dates of note:

- 1950: Alan Turing published “Computing Machinery and Intelligence”, which proposed a test of machine intelligence called the Imitation Game.

- 1952: Computer scientist Arthur Samuel developed a checkers-playing program, the first to learn the game independently.

- 1955: John McCarthy and colleagues proposed a workshop at Dartmouth on “artificial intelligence”, the first use of the term; the workshop itself took place in 1956 and brought the term into popular usage.

Artificial General Intelligence 2012-present

Artificial General Intelligence (AGI), also known as Strong AI or Human-level AI, refers to AI systems that possess the ability to understand, learn, and apply knowledge across a wide range of tasks at a level comparable to human intelligence.

No system is yet widely accepted as having achieved AGI. However, significant advances have been made in areas related to AGI research from 2012 to the present. Here are some key developments and trends during this period:

2012: Two researchers from Google (Jeff Dean and Andrew Ng) trained a neural network to recognize cats by showing it unlabeled images, with no background information about what they contained.

2015: Elon Musk, Stephen Hawking, and Steve Wozniak (and over 3,000 others) signed an open letter calling on the world’s governments to ban the development, and later the use, of autonomous weapons for purposes of war.

2016: Hanson Robotics created a humanoid robot named Sophia, who became known as the first “robot citizen” and was the first robot created with a realistic human appearance and the ability to see and replicate emotions, as well as to communicate.

2017: Facebook programmed two AI chatbots to converse and learn how to negotiate, but as they went back and forth they ended up forgoing English and developing their own language, completely autonomously.

2018: A language-processing AI built by the Chinese tech group Alibaba outscored humans on a Stanford reading-comprehension test.

2019: AlphaStar, developed by Google’s DeepMind, reached Grandmaster level in the video game StarCraft II, outperforming all but 0.2% of human players.

2020: OpenAI started beta testing GPT-3, a model that uses deep learning to generate code, poetry, and other kinds of writing. While not the first of its kind, it is the first to create content almost indistinguishable from content created by humans.

2021: OpenAI developed DALL-E, which generates images from text captions, moving AI one step closer to understanding the visual world.

So what does the future of AI hold for us?

Well, we can never entirely predict the future. However, many leading experts talk about the possible futures of AI, so we can make educated guesses. We can expect to see further adoption of AI by businesses of all sizes, changes in the workforce as more automation eliminates and creates jobs in equal measure, more robotics, autonomous vehicles, and so much more.